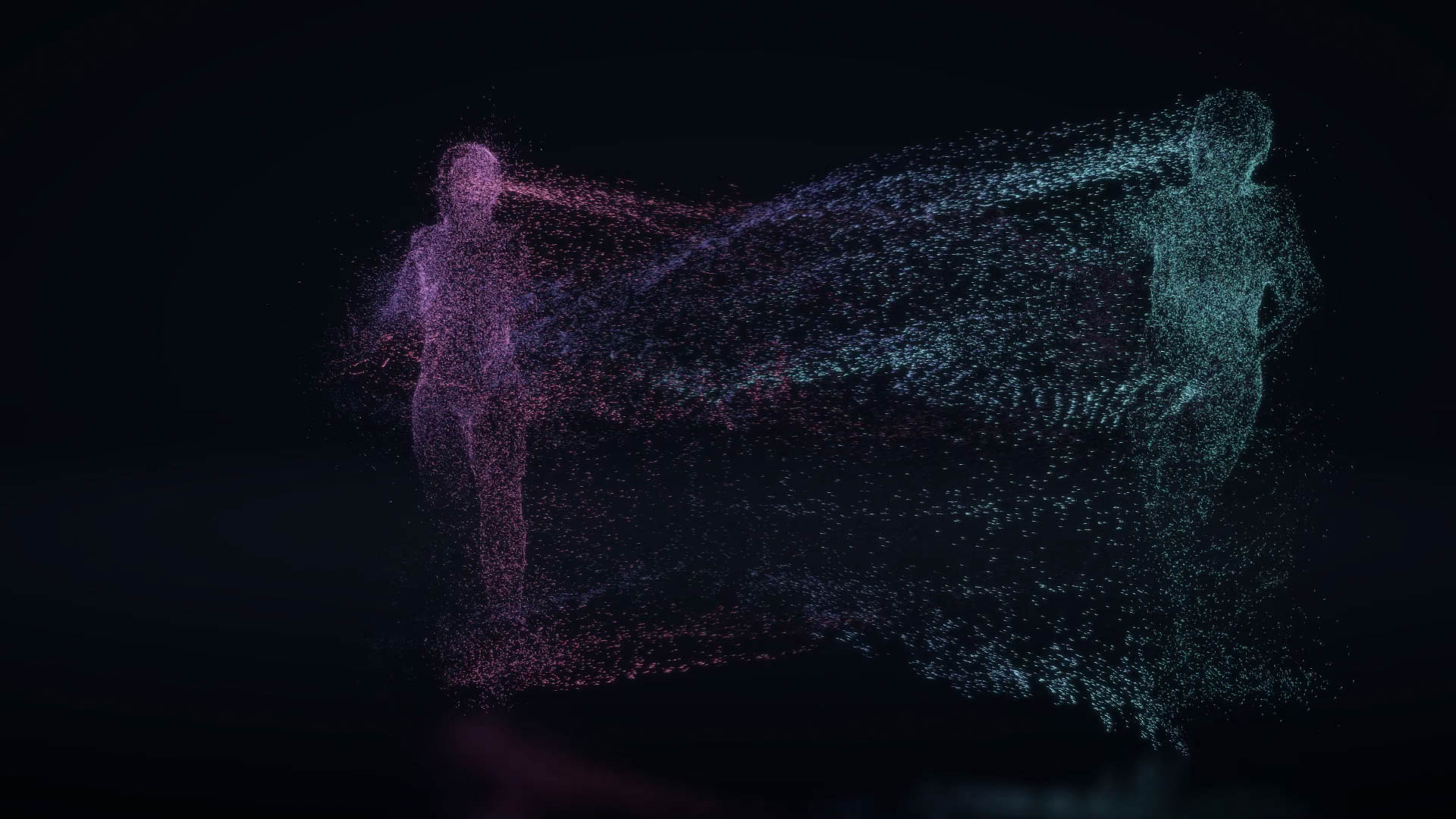

Body RemiXer connects bodies through movement. It is an experiential, projection-based Virtual Reality installation that explores novel forms of embodied interaction between multiple participants: their bodies mix into a shared embodied representation, producing a playful interaction that aims to support feelings of connection and self-transcendence. Body RemiXer is both an artistic installation and a research platform for investigating how our bodies relate to our sense of “self”.

This project was created for the IAT848 (Mediated, Virtual and Augmented Reality) class by John Desnoyers-Stewart and Katerina Stepanova. Body RemiXer builds in part, both conceptually and technically, on previous work by each team member: John Desnoyers-Stewart’s Transcending Perception installation (an exploration into virtual, mixed, and expanded reality), Stepanova’s AWE project (designing awe-inspiring VR experiences for fostering feelings of transcendence and global interconnectedness) and their joint JeL project, currently in development (connecting people through breathing synchronization).

A video of the project can be found here and a repository of the code can be found on GitHub.

Background

Our perception of body and movement is tightly related to our perception of “self”. According to embodied cognition theories, the way we interact with the world and experience it through the senses and motor activity of our physical body determines how we understand the world and ourselves (as part of the world). The way we see our body affects how our sense of identity is formed. For instance, when we perceive ourselves to look, behave, or move similarly to another person, we feel affiliation with them: we feel that we are part of the same group and that we are connected. This is true even for preverbal infants, who prefer characters that make the same food choices (Hamlin et al., 2013) or even wear the same coloured t-shirt. A similar principle is evident in studies showing that moving in synchrony with others leads to a stronger feeling of connection and greater willingness to cooperate (Marsh et al., 2009). These effects also hold for interactions with virtual characters: people feel more connected to avatars moving in sync with them (Tarr, Slater, & Cohen, 2018) and, somewhat alarmingly, indecisive participants are biased towards virtual political candidates that have been modified to look similar to the participant and to mimic the participant’s behaviour without them noticing it (Bailenson, Iyengar, & Yee, 2011).

We perceive our body through sensory input combined across multiple senses (multisensory integration) and through how these perceptions respond to our intentions to act. When these inputs are aligned, we perceive ownership of that body. This alignment of multiple sensory inputs leads to the famous rubber hand illusion, which can be induced through a simple physical setup or in virtual reality. In this illusion participants see a fake hand being stroked (or receiving another form of tactile stimulation) and/or moving in coordination with the real hand, which creates the illusion that the fake hand is a part of their body. Virtual reality combined with full body tracking allows the creation of systems in which the movement of a participant is mapped to the movement of a virtual representation of their body, thus providing an opportunity to experience ownership of that body. Notably, this body doesn’t even need to be seen in the same place as the physical body of the user, as long as the sensorimotor inputs are aligned.

Several Virtual Reality projects have explored the relationship between the virtual body and the cognition of the body owner. Several of these studies look at the effects of embodying someone else’s body, e.g. one of a different race (Banakou, Hanumanthu, & Slater, 2016), gender, or age (Yee & Bailenson, 2006), which could include body swapping with another person (Oliveira et al., 2016) or embodying an avatar. The resulting change in one’s behaviour (including behaviour towards the category of people one is embodying in Virtual Reality) has been called the Proteus Effect (Yee, Bailenson, & Ducheneaut, 2009), and has even been applied to non-human characters such as animals, like cows (Ahn et al., 2016), or even corals (Markowitz, Laha, Perone, Pea, & Bailenson, 2018). This also includes exhibiting traits that are attributed to the embodied character, e.g. the pro-sociality of Superman (Rosenberg, Baughman, & Bailenson, 2013). Virtual Reality has also been used to induce out-of-body experiences by encouraging body ownership of a virtual avatar and then removing one’s viewpoint from that virtual body (Bourdin, Barberia, Oliva, & Slater, 2017), which can be viewed as an example of a self-transcendent experience.

Thus, so far in previous research we have seen that:

- Through multisensory integration participants can perceive a virtual body as their own (body ownership illusion).

- Embodying a virtual body different from your own can lead participants to attribute the traits of that virtual character to themselves and can increase their connection to the group that the virtual body belongs to, as shown through reduced stereotyping and increased positive attitudes and behaviors.

- Virtual body swapping with another person can lead the participants to develop more understanding, empathy, and connection with the other person whose body they embodied.

- It is potentially possible to have a self-transcendent experience in VR by inducing body ownership of a virtual body and then transcending the boundaries of that virtual body, creating a virtual, but literally self-transcendent, experience.

More detailed discussion of some of the related studies and projects can be seen on the project plan page.

While body swapping and out of body experiences have been attempted in VR, to the best of our knowledge, an experience that would combine them both and develop a gradual transition between the states (owning a virtual body, transcending it, connecting with another body) hasn’t been explored.

If we can swap our body with another person in VR to gain an understanding of their perspective, can we embody one single body with another person at the same time? And, if we can, what kind of effect would it have on us? Can we form a stronger feeling of connection to others through a literal (but virtual) experience of unity with them?

In the real world such experiences are rare at best. Each of us had an experience of unity and shared embodiment with our mothers while in the womb, which was arguably a profound experience of deep connection. However, that is not an experience we can recreate between people, and it is too complex to be used for understanding the idea of shared embodiment or for developing guidelines for the design of positive technology. Another real-world example of shared embodiment is conjoined twins. They are rare and, possibly because interest in the phenomenon has been mainly medical, have not received much scientific attention from psychologists investigating the psychological connections formed between twins as a result of sharing a body.

In a less literal sense, “shared embodiment” can be discussed with examples of socially distributed cognition where a team operates one system (e.g. a boat) as a unit (Hollan, Hutchins, & Kirsh, 2000), so that all members of the team could, in a way, have an experience of embodying the boat. These experiences can create a feeling of unity and connection between all participants, which could be attributed to the process of collaboration or to synchronous movement (e.g. in a rowing boat), both of which can support the feeling of connection. An interesting question here for the purpose of our project is whether the team members operating the boat have an experience of “being” the boat.

In the Body RemiXer project we build on previous research into VR applications of virtual body ownership, body swapping and out-of-body experiences to create a new platform for exploring the potential of VR. The platform is intended to create experiences that challenge our preconceptions about the relationship between our body, its boundaries and the sense of self, and to offer new experiences that are impossible in physical reality and that could provide an environment for experiencing self-transcendence and connection. This project explores how the feeling of shared embodiment can be created and what effects it may have on the participants experiencing it and on their relation to each other.

System Implementation

The development of Body RemiXer followed an iterative agile development process to allow insight throughout the development process to inform the result. The initial requirements and planned implementation were loosely set enabling new tools and ideas to be integrated at each iteration. Components were mocked up quickly as prototypes to verify their implementation and allow for an early understanding of their function and aesthetics. This allowed us to confront assumptions made in the initial requirements and to develop a flexible, continuously improving concept of the final product.

An iterative approach was essential to integrating the many components required for this system to function while retaining the creative and reflexive capacity of artistic practice. This not only allowed us to rapidly test and debug at each step, but also to integrate unexpected results that sometimes arose. From prior experience we knew that we should be able to control a rigged mesh using Kinect skeletal tracking, and to generate a particle system from that mesh, but beyond this the system had to be able to combine and overlay bodies in compelling ways that might evoke a particular feeling of connection through movement. We used an animated mesh to simulate the particle system results before the Kinect skeletal tracking was finished or when we didn’t have access to the Kinect. As such, we were able to experiment with various particle system configurations and inputs. Simultaneously, we used models which would highlight imperfections in the tracking to ensure those imperfections would be minimized before integrating the body tracking and particle systems. In this way each component was able to grow steadily towards the realized implementation while informing other components.

The code is available on GitHub at https://github.com/jdesnoyers/Body_RemiXer.

Hardware

Body RemiXer uses a Kinect V2 to track the movement of up to 6 bodies in a 0.5-5 m range and projects imagery onto two perpendicular projections up to 6 x 3.4 m in size. The projections and Kinect are connected to a single computer equipped with an NVIDIA GeForce 970 graphics card. Two additional Kinect V2s were purchased for this project as backups and for us to use from home without disturbing the primary setup in the Black Box. The venue used, the Black Box, required considerable preparation to ensure consistent, reliable, and safe operation. We began by decluttering the space and storing unused equipment. We then set up the system by routing power and HDMI cables through the ceiling trusses, hanging a projection screen, tightening another, and safely suspending the projectors in position. The Kinect’s position is manually calibrated relative to the screens and floor: while it is capable of detecting the floor plane, the black dance floor in the Black Box is not visible to its infrared camera. Figure 1 shows the layout for the development installation.

System Integration

Body RemiXer was developed in Unity 2019.1, mostly while this version was still in Beta, with the final version released on April 16, 2019. This allowed us to take advantage of a number of new and upcoming (still in beta or preview) features such as Unity’s new High Definition Render Pipeline (HDRP) and Visual Effects Graph (VFX Graph). These features were essential to realizing our vision for Body RemiXer as they provided the ability to create more aesthetically satisfying visuals with a level of customization not previously available.

This also significantly complicated the development, as the HDRP and VFX Graph are both still in preview and lack documentation and testing. For example, we ran into problems with the GL.InvertCulling function and with altering the projection matrix, as these features seem to have changed. As a result, we could not properly control the camera to create a reflection as desired and had to resort to mirroring the image using the projector itself. In addition, VR will not be fully supported for the HDRP until Unity 2019.2, so we opted to focus on projection-based interaction. We hope to correct these issues in future iterations as Unity moves HDRP from preview to fully implemented. We have also started setting up the project to run on two networked computers to allow for the use of two HMDs (and potentially 2 Kinects), but we put this implementation on hold until we can get a single HMD working with the next Unity version.

The full system implementation is shown in Figure 2, starting with movement as input at the Kinect, being processed through a variety of scripts and assets in Unity, and output on the right as projections. The details of each component are described in the following sections.

Kinect Body Tracking

Body RemiXer was built on a Kinect skeleton tracking to mesh mapping system which John Desnoyers-Stewart previously developed for his MFA installation Transcending Perception. In that system, the tracked bodies were mapped to rigged meshes which were used to generate particles using Keijiro Takahashi’s Skinner particle system and were linked to PureData based instruments which generated sound based on the position, velocity, and acceleration of the hands.

For Body RemiXer the tracking was significantly improved to ensure that the bodies could be tracked as consistently and reliably as possible. This was essential as the remixing operations would amplify any problems with the tracking and we wanted to show a closer representation of the body. The tracking system was also improved to work with rigged meshes produced using the Adobe Fuse platform to allow for more rapid production of models. This was primarily accomplished by aligning the Kinect joint data with the rigged mesh’s joint transforms.

The Kinect SDK 2.0 and Kinect Unity SDK were used to connect the incoming Kinect data stream to Unity. The script BodySourceManager.cs was taken from the Kinect Unity SDK with minimal modifications and KinectBodyTracker.cs was mostly reused from Transcending Perception. These take the incoming joint data stream from the Kinect, filter it, and convert it into joint transforms which can be used by Unity to position the joints of a rigged mesh.

BodyRemixerController.cs is the main script and does most of the work in mapping joints into the remixed bodies. This script reuses two functions from Transcending Perception: CreateMeshBody() and UpdateMeshBodyObject(). UpdateMeshBodyObject() was improved to work with rigged meshes created in Fuse and to allow for rotation only, position only, or combined position/rotation control of the rigged mesh. This script handles mapping the joint data to the controlled meshes and creating and deleting those meshes and game objects as necessary. It keeps track of the bodies in a set of dictionaries which map between an ID set by the Kinect and the main body Unity GameObject as well as the array of joints.
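The bookkeeping described above can be pictured with a small sketch. It is written in Python for brevity rather than in the C# used by the Unity project, and the names `BodyRegistry`, `sync`, and `make_body` are hypothetical and not taken from BodyRemixerController.cs. It only illustrates the idea of keying bodies and joint arrays by the tracking ID and creating or deleting entries as bodies enter and leave the tracked set.

```python
class BodyRegistry:
    """Keeps per-body state keyed by the tracker-assigned ID (illustrative only)."""

    def __init__(self, make_body):
        # Hypothetical factory; it stands in for a call such as CreateMeshBody().
        self.make_body = make_body
        self.bodies = {}   # tracking ID -> body object
        self.joints = {}   # tracking ID -> list of joint positions

    def sync(self, tracked):
        """Update the registry from one frame of tracking data.

        tracked: dict mapping tracking ID -> list of joint positions.
        Returns (created_ids, removed_ids).
        """
        # Delete bodies that are no longer tracked.
        removed = [body_id for body_id in self.bodies if body_id not in tracked]
        for body_id in removed:
            del self.bodies[body_id]
            del self.joints[body_id]

        # Create newly tracked bodies and refresh joint data for all of them.
        created = []
        for body_id, joint_data in tracked.items():
            if body_id not in self.bodies:
                self.bodies[body_id] = self.make_body(body_id)
                created.append(body_id)
            self.joints[body_id] = list(joint_data)
        return created, removed
```

Calling `sync` once per frame keeps both dictionaries consistent with whatever the tracker currently reports, so later stages can look up a body or its joints by ID alone.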

BodyRemixerController.cs allows for the selection between 5 modes: Exchange (or off), Swap, Average, Exquisite Corpse, or Shiva. Those 5 modes define the mapping of 3 mesh bodies: (1) The original mesh body—a direct representation of the immersant’s body; (2) the local remixer body—a locally mapped remixed mesh overlaid onto each body; and in some cases, (3) a “third-person” body which is shared between all immersants and fixed in place on one of the projections. The implementation of each mode is described below with further details about the experiential results in the User Experience Design section.

- Exchange: In exchange mode the remixer bodies are disabled and only the original mesh body is used. Tracked bodies are paired up where possible into a dictionary of pairs which is used to exchange particles between the mesh bodies. The third-person body is not used in this mode.

- Swap: In swap mode tracked bodies are once again paired up. In this case, the paired body is mapped to the local remixer body, superimposing each body onto the other and allowing the immersant to see their partner’s body compared to their own in the reflected projection. The local rotations and positions of each joint other than the spine joints are updated directly from the partner’s body. The third-person body is not used in this mode either.

- Average: In average mode the local rotations and positions of each joint are averaged and superimposed onto each body. This allows each immersant to see themselves in direct comparison with an average of all bodies. The third-person body is also used to display the average alone, allowing the shared control of another body by the immersants. Math3D.cs, a script obtained from wiki.unity3d.com, was used for the complicated operation of averaging an indeterminate array of quaternions as outlined in a NASA research paper on the subject (Markley et al., 2007). This supporting script functions well when joint rotations are similar, but when they near opposing each other the average rotation becomes unstable and sometimes results in strange orientations of the averaged limbs.

- Exquisite Corpse: In exquisite corpse mode each immersant is given control over part of the remixed body. For example: when two players are present, one controls the upper body while the other controls the lower body; with four players, each player controls a limb (left arm, right arm, left leg, right leg) and so on. Once again the local remixer body superimposes the shared body over the user’s own. A third-person body is also displayed on the second projection to show the result. Particles are emitted from the immersant’s body to the local shared body and then from the local shared body to the third-person body.

- Shiva: With Shiva mode the immersant is given control of one of many overlaid bodies in the second projection. Each mesh body is overlaid onto the third-person body shown in the second projection, creating a figure which seems to have many arms and legs like a Hindu god. Particles emitted from the immersant’s body are attracted towards their representative Shiva body.
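Three of the operations in the list above (pairing bodies, dividing a shared body between players, and averaging joint rotations) can be sketched as follows. This is a Python illustration under stated assumptions, not the project's C# code. Pairing in sorted ID order and splitting a hypothetical `LIMBS` list into contiguous shares are assumptions chosen to reproduce the two-player and four-player examples. The averaging follows the definition in Markley et al. (2007), where the average is the dominant eigenvector of the sum of outer products of the quaternions; the power iteration used here to find it is a substitute that needs no libraries and is not necessarily what Math3D.cs does.

```python
import math

LIMBS = ("left_arm", "right_arm", "left_leg", "right_leg")  # hypothetical part names


def pair_bodies(body_ids):
    """Pair IDs two at a time in sorted order; an odd body out stays unpaired."""
    ids = sorted(body_ids)
    pairs = {}
    for a, b in zip(ids[0::2], ids[1::2]):
        pairs[a], pairs[b] = b, a
    return pairs


def split_parts(player_ids, parts=LIMBS):
    """Give each player a contiguous share of the parts (arms before legs)."""
    ids = sorted(player_ids)
    shares = {pid: [] for pid in ids}
    owners = min(len(ids), len(parts))
    for i, part in enumerate(parts):
        if owners:
            shares[ids[i * owners // len(parts)]].append(part)
    return shares


def average_quaternions(quats, iterations=64):
    """Average unit quaternions given as (w, x, y, z) tuples.

    Builds M = sum(q q^T) and finds its dominant eigenvector by power
    iteration. q and -q contribute identically, as they are the same rotation.
    """
    if not quats:
        raise ValueError("need at least one quaternion")
    m = [[sum(q[i] * q[j] for q in quats) for j in range(4)] for i in range(4)]
    v = list(quats[0])
    for _ in range(iterations):
        w = [sum(m[i][j] * v[j] for j in range(4)) for i in range(4)]
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    if v[0] < 0:  # pick a canonical sign
        v = [-c for c in v]
    return tuple(v)
```

When the input rotations approach opposing orientations, the two largest eigenvalues of M move close together and the dominant eigenvector becomes poorly defined, which is consistent with the instability noted for Average mode.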

In future iterations BodyRemixerController will be separated into two scripts, MeshBodyController.cs and RemixerBodyController.cs, to allow for reuse in other projects and to clarify the code. RemixModeChanger.cs is a simple script which advances through each of the remixer modes automatically or on pressing the ‘+’ or ‘-’ key. RemixerVfxControl.cs is controlled by BodyRemixerController.cs and handles activating and initializing the VFX systems, setting source and target meshes for the particle system, and managing particle colours within each body GameObject.
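The behaviour of a mode changer like this can be sketched in a few lines. The following Python is an illustration only and does not reproduce RemixModeChanger.cs: the class and method names are hypothetical, the assumption that ‘-’ steps backwards and that a key press resets the timer is ours, and the 120 second default simply mirrors the 2 minute demo setting mentioned in the User Experience Design section.

```python
from enum import Enum


class RemixMode(Enum):
    EXCHANGE = 0
    SWAP = 1
    AVERAGE = 2
    EXQUISITE_CORPSE = 3
    SHIVA = 4


class ModeChanger:
    """Cycles through the remix modes on a timer or on key presses (sketch)."""

    def __init__(self, interval=120.0):
        self.interval = interval      # seconds spent in each mode
        self.elapsed = 0.0
        self.mode = RemixMode.EXCHANGE

    def shift(self, step):
        # Wrap around in either direction and restart the timer.
        self.mode = RemixMode((self.mode.value + step) % len(RemixMode))
        self.elapsed = 0.0
        return self.mode

    def on_key(self, key):
        if key == "+":
            return self.shift(+1)
        if key == "-":
            return self.shift(-1)
        return self.mode

    def tick(self, dt):
        """Call once per frame with the frame time in seconds."""
        self.elapsed += dt
        if self.elapsed >= self.interval:
            self.shift(+1)
        return self.mode
```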

There are also a number of scripts which perform minor roles and were originally developed for Transcending Perception.

Minor scripts:

- JointCollection.cs – Allows for quick access to joint GameObjects as an array when creating new rigged meshes from a prefab.

- ScreenActivator.cs – Activates second and third monitor allowing for multiscreen projection.

- CalibrationControl.cs – Allows for calibration of projector positions and Kinect position during runtime as well as saving and loading calibration settings and checking the framerate.

- FreezeRotation.cs – Freezes rotation of a transform. Used to support a feature in CalibrationControl.cs.

Unused scripts:

- ReflectionTracker.cs – Tracks average position of tracked bodies’ heads and adjusts camera projection matrix to simulate a mirror. Incompatible with HDRP but will be modified in future iterations to regain high-quality mirror projection.

- InvertCulling.cs – Inverts culling in camera to accommodate reflected projection matrix. Incompatible with HDRP but will be re-implemented in future.

Skinned Meshes in VFX Graph

The VFX Graph is Unity’s GPU-based particle system, currently in development and available as a preview since Unity 2018.3. While documentation is currently limited, Unity has provided some basic documentation on their GitHub and has released a number of examples to demonstrate potential uses. Despite this limited documentation, the VFX Graph allows for robust and highly customizable particle systems with thousands or even millions of particles depending on the GPU. It far exceeds the previous in-engine capability of Unity’s CPU-based Shuriken Particle System and uses a node-based editor for more intuitive particle system manipulation. We chose this system over the highly praised PopcornFX for its tighter integration with Unity, the ability to edit in real time in the Unity editor, and because it was available at no additional cost.

While the Unity VFX Graph has a lot to offer for a preview, it is currently lacking any direct way of integrating skinned meshes, instead requiring meshes to be pre-baked which would prevent the mesh from updating at runtime. Fortunately this is an area of considerable interest for the Unity developer community, so several packages already exist on GitHub to translate skinned meshes into VFX Graph readable textures in real time. We used Keijiro Takahashi’s SMRVFX to convert the vertices of our skinned meshes to position and velocity texture maps used to spawn and attract particles. These work well for precisely generating and targeting particles, but do not result in a sufficiently dynamic outcome. To make the particles more lively while conforming to the mesh more tightly we used Aman Tiwari’s MeshToSDF. This system converts a mesh to a signed distance field, which can be used in VFX Graph to attract particles to “conform to a signed distance field” producing a more dynamic and consistent result. MeshToSDF unfortunately had a major memory leak which continuously generated and orphaned baked meshes (one 100,000 vertex mesh per frame); however, as it caused significant performance issues it was easy to identify and we have since corrected the issue and submitted a pull request to apply it to Tiwari’s GitHub repository.

Model Development

The skinned meshes used in Body RemiXer were developed using a combination of Adobe Fuse, Mixamo, and Blender. While Fuse is capable of producing a wide variety of forms, those forms are based on either a male or a female base mesh, and Fuse tends to produce sexually dimorphic and sometimes stereotypical bodies which can easily be distinguished as one or the other. For Body RemiXer we wanted to produce an androgynous mesh that would evoke qualities of both and neither sex simultaneously, something which the dimorphic controls of Fuse could not accomplish. In addition, we needed to modify the rigged mesh to conform to the Kinect skeleton, which lacks one of the spinal joints and offsets several of the joints slightly from the Fuse default.

To accomplish this consistently we began by modifying the Fuse base meshes in Blender to be more androgynous, adjusted the model in Fuse, auto-rigged it in Mixamo, and then adjusted the rigged mesh in Blender before importing it into Unity. There were also normally hidden internal meshes of the eyes and mouth, as well as higher concentrations of vertices around the eyes and ears, which produced disturbing forms and an excess of particles. The process required significant trial and error to determine the correct set of steps to obtain the desired result. The resulting process is as follows:

- Modify the Fuse default mesh in Blender to allow for body shapes outside of the range which Fuse allows, producing the result shown in figure 3.

- Export from Blender as Wavefront .obj file and import into Fuse.

- Fine tune and customize model in Fuse.

- Export to Mixamo for autorigging:

  - Turn off facial blendshapes (keeps mesh simpler).

  - Set to two-chain fingers (fits Kinect skeleton better).

- Download rigged Collada (.dae) file (using the .fbx file’s weight values for each joint causes folding in the joints).

- Import into Blender with settings shown in figure 4 below.

- Edit mesh to remove mouth and hidden portions of eyes (shown in figure 5 below).

- Edit mesh to simplify eyes and ears (excessive concentration of vertices results in excess particles originating from these areas). Balanced vertex counts can be obtained through remesh or decimate.

- Clean up mis-grouped vertices: some in the feet and abdomen assigned to the head or unassigned become stretched when rig is manipulated.

- Adjust rig to match Kinect (figure 6):

  - Combine Spine1 and Spine2 bones.

  - Raise Spine2 end to align with the Kinect’s Spine_Shoulder joint position.

  - Align neck bone with center of neck.

  - Align head joint with center of head.

  - Lower Foot joints into heel to align with the Kinect’s “flat-footedness.”

- Export as .fbx file (importing the Blender file directly fails for some reason) and import into Unity project.

Figure 3. Androgynous mesh prepared for Fuse

Figure 4. Blender Collada import settings from Mixamo

Figure 5. Eye and mouth meshes removed from body

Figure 6. Re-rigged skeleton for use with Kinect V2

VFX Graph Particle System

In addition to the aforementioned scripts and model development, Unity’s VFX Graph was used to develop the particle systems used in Body RemiXer. This node-based platform allowed for the complex yet efficient and widely customizable design of particle systems. We began by looking through some of Unity’s example VFX Graphs to understand the implementation and then started with the position example VFX Graph in Keijiro Takahashi’s SMRVFX. We stripped it down to what was needed for our system and began to build it up from there. The particles use the built-in “sparkle” 2D sprite texture which can be stretched along its axis to produce a shooting-star-like effect or scaled down to produce small specks.

Through an iterative process we experimented with different combinations of parameters and operators to produce the desired output. Several parameters, including source and target textures, colors, and positions are exposed in the Unity editor to facilitate quick adjustment and connection to scripts and other GameObjects as shown in Figure 7. The operation of the resulting VFX Graph shown in figure 8, “Particles-Attract.vfx” is described below.

Spawn & Initialize

The particle spawn rate is affected by the number of tracked bodies to stabilize the program as more bodies (and therefore particle systems) are added. A spawn rate of 12000 particles per second with a capacity of 24000 particles was arrived at through trial and error. With a particle lifetime of 5-10 seconds this produces a short burst on initialization and then spawns new particles as needed to replace old ones.

Particles are spawned from the mesh using the source position map generated by SMRVFX with an initial velocity set by a source velocity map. The lifetime is set to a random number between a maximum life setting and half that (typically set to 10 seconds to produce particles with lifetimes of 5-10 seconds). The colour is initialized to black which effectively makes this additive rendered particle’s default appearance invisible to allow for later control of its appearance. The spawn and initialize graph are shown in figure 9.
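The figures above can be checked with a little arithmetic, sketched here in Python. The constants come from the text; the function names are hypothetical, the lifetime is assumed to be uniformly distributed, and the capacity is assumed to act as a hard cap on live particles. At 12000 particles per second the 24000 particle capacity fills in 2 seconds, well before the first particles expire at 5 seconds, which accounts for the short initial burst. Without the cap, the same rate and a mean lifetime of 7.5 seconds would sustain about 90000 particles, so after the burst the capacity, not the spawn rate, limits the system and new particles appear only as old ones die.

```python
import random

SPAWN_RATE = 12000   # particles per second (from the text)
CAPACITY = 24000     # maximum live particles (from the text)
MAX_LIFE = 10.0      # seconds, the typical maximum life setting (from the text)


def fill_time(rate=SPAWN_RATE, capacity=CAPACITY):
    """Seconds of spawning needed to reach capacity from an empty system."""
    return capacity / rate


def sample_lifetime(max_life=MAX_LIFE, rng=random):
    """Lifetime drawn between half the maximum and the maximum.

    A uniform distribution is an assumption; the text only gives the range.
    """
    return rng.uniform(max_life / 2.0, max_life)


def unconstrained_population(rate=SPAWN_RATE, max_life=MAX_LIFE):
    """Steady-state particle count if capacity were unlimited (rate x mean life)."""
    mean_life = 0.75 * max_life   # midpoint of [max_life / 2, max_life]
    return rate * mean_life
```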

Update

The update portion of the VFX Graph shown in figure 10 runs every frame and updates all of the particles simultaneously on the GPU. First a turbulence force is applied to add some variation and movement to the particles, giving the appearance of wispy smoke, then a small amount of drag is applied to slowly bring the particles to rest and prevent runaways. These parameters were based on those used in the SMRVFX demo as they produced ideal results “out of the box.”

Target Position Attraction & SDF Control

Next, a target position is set based on the target object’s position map. This target position allows for the calculation of an attraction force based on their distance from the target. As this produces fairly stagnant results on its own, Conform to Signed Distance Field (SDF) is used to cause the particles to be attracted to, and then stick within a certain distance of the mesh. MeshToSDF is used to calculate the SDF which is fed into the Conform to SDF block and the settings are exposed so they can be modified directly from other scripts and within the editor. The SDF has to be offset with the mesh so the position of the Mesh’s origin is fed into the block as well. The settings of the SDF attraction force were optimized by iterating through a range of parameters and have been exposed for direct control from a script.
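The combined effect of drag, target attraction, and SDF conformance can be illustrated with a simplified single-particle update in Python. This is not how the VFX Graph blocks are implemented: the signed distance field is replaced by an analytic sphere, turbulence is left out, and the force constants and the name `update_particle` are hypothetical. It only shows how a pull towards a target point and a pull towards the surface, damped by drag, settle a particle onto the shape.

```python
import math


def update_particle(pos, vel, target, centre, radius, dt,
                    attract=2.0, conform=4.0, drag=2.0):
    """Advance one particle by dt seconds (illustrative, sphere stands in for the SDF)."""
    # Signed distance to a sphere: negative inside, positive outside.
    offset = [p - c for p, c in zip(pos, centre)]
    dist = math.sqrt(sum(o * o for o in offset)) or 1e-9
    signed_distance = dist - radius
    normal = [o / dist for o in offset]

    # Attraction grows with distance from the target position;
    # the conform term pushes the particle back towards the surface.
    force = [attract * (t - p) - conform * signed_distance * n
             for p, t, n in zip(pos, target, normal)]

    # Drag slowly brings the particle to rest and prevents runaways.
    damping = max(0.0, 1.0 - drag * dt)
    vel = [(v + f * dt) * damping for v, f in zip(vel, force)]
    pos = [p + v * dt for p, v in zip(pos, vel)]
    return pos, vel
```

Starting a particle two units outside a unit sphere with a target on its surface and stepping at 60 updates per second brings it to rest on the surface within a few seconds under these constants.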

Output

The Particles-Attract.vfx VFX Graph in Body RemiXer uses a Quad Output with an additive sprite renderer, shown in figure 11. Source and target colours are fed into a custom-made “Particle over Lifetime” module, where they are blended together. Some colour dynamics are added and multiplied in by the “Make Pretties” module, and then the particles are scaled based on their age and velocity.

Particle Over Lifetime

The Particle over Lifetime module takes all of the input colours and processes them depending on the remixer mode. If the remixer is in average mode the target colour is set to that of a randomly chosen tracked body. Otherwise the target colour is simply set to match that of the target body. The colours’ hue and saturation values are then jittered before being fed into the blend colour module. A source sample curve and a target sample curve are used to set the blending value over the lifetime of the particles.
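A rough Python equivalent of this colour logic is sketched below. It is not a transcription of the VFX Graph module: the function names, the jitter ranges, and the linear default curves are hypothetical, and treating the two curves as separate weights on the source and target colours is an assumption about how they combine.

```python
import colorsys
import random


def pick_target_colour(mode, target_colour, tracked_colours, rng):
    """In average mode use a random tracked body's colour, else the target body's."""
    if mode == "average":
        return rng.choice(tracked_colours)
    return target_colour


def jitter(rgb, rng, hue_range=0.03, sat_range=0.1):
    """Randomly nudge hue and saturation while leaving brightness unchanged."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h = (h + rng.uniform(-hue_range, hue_range)) % 1.0
    s = min(1.0, max(0.0, s + rng.uniform(-sat_range, sat_range)))
    return colorsys.hsv_to_rgb(h, s, v)


def blend(source, target, age, lifetime,
          source_curve=lambda t: 1.0 - t, target_curve=lambda t: t):
    """Weight source and target colours by curves sampled at normalized age."""
    t = min(1.0, max(0.0, age / lifetime))
    ws, wt = source_curve(t), target_curve(t)
    return tuple(ws * a + wt * b for a, b in zip(source, target))
```

With the default curves a particle starts in the source body's colour and ends in the target's; because the particles are rendered additively, curves that start or end at zero would also fade the particle in or out.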

Make Pretties & Scale

Make Pretties adds variation to the particle colours using Perlin noise based on the particle’s current position and the current simulation time. The Perlin noise is used to sample a gradient which shifts the colour towards a purple or magenta hue. It is also used in combination with a sine wave to sample another gradient that multiplies the particles’ brightness, varying it between 75% and 200% of its nominal value. The Scale module sets the length of the particles based on their velocity and sets their width based on their age mapped to a source and target blend curve. Both are shown in figure 13.
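The brightness range and the scaling rule can be made concrete with a short Python sketch. Only the 75% to 200% range comes from the text. The noise value is passed in as a plain number instead of being computed from Perlin noise, the way it is combined with the sine wave is an assumption, and the size constants, the single width curve, and the function names are hypothetical.

```python
import math


def brightness_multiplier(noise, time, low=0.75, high=2.0):
    """Map a noise value plus time, through a sine wave, into [low, high]."""
    wave = 0.5 + 0.5 * math.sin(2.0 * math.pi * (noise + time))  # 0..1
    return low + (high - low) * wave


def particle_size(speed, age, lifetime, length_per_speed=0.05,
                  base_width=0.01, width_curve=lambda t: 1.0 - t):
    """Stretch length with speed; shape width with a curve over normalized age."""
    t = min(1.0, max(0.0, age / lifetime))
    length = base_width + length_per_speed * speed
    width = base_width * width_curve(t)
    return length, width
```

Fast particles therefore render as long streaks and slow ones as small specks, matching the shooting-star and speck appearances of the sprite described earlier.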

User Experience Design

Body RemiXer was designed to provide an inviting and playful user experience, where participants can discover for themselves how the system works through interaction. The visual representation of a participant was designed with a particle system to provide a magical, ambiguous but still believable and self-relevant experience. Particles follow rules of physics, so they leave traces of movement, escape beyond the boundaries of the body, and increase or decrease in concentration in different areas depending on the speed and coordination of movement. This open but still somewhat predictable interaction encourages playful exploration of various movements, minimizing the self-consciousness participants may feel when asked to move playfully in a public space.

We used an androgynous model to facilitate association with the virtual representation and to avoid any feelings of “otherness” or gender-swapping; these, although interesting, would bring implications to the experience beyond the desired connection. This was an important design consideration, and we experimented with both female and male bodies to confirm our suspicions. In pilot testing, female users noticed the masculinity of an overly broad and muscular body, while both female and male users noticed an overly stereotyped curvy female body. We therefore designed the model to remove as many identifying features as possible and to conform as closely as a single body could to a wide range of body images. The chest, hips and calves seemed to be the strongest indicators, so these were normalized to avoid any clear suggestion of either sex while retaining characteristics of both feminine and masculine bodies.

The particle system was designed to encourage slow, thoughtful movement by showing a clearer figure with slow movements while disappearing into a more amorphous shape as movement energy increased. This was also designed to allow for an extension of expressive movement beyond the bodily form, to transition to a representation of the body’s energy rather than form.

Body RemiXer was designed to work with as many bodies as the Kinect V2 could track. To avoid confusion between bodies, each participant is given a different particle colour. The particles vary from the colour of the source body to that of the target body or, in the case of the averager, they randomly select one of the active colours as a target colour, showing all of the bodies which are affecting that one. The colours were selected for their harmony with other colours while maintaining sufficient distinction from each other by having their hues spread across the colour wheel. The order of the colours is:

1. Turquoise, 2. Rose, 3. Purple, 4. Amber, 5. Blue, 6. Lime.

The system was also designed to automatically advance through a series of 5 modes after a set period of time (currently set to 2 minutes for demo purposes) to allow participants to experience multiple body-sharing experiences. The design of each mode is detailed below.
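The timed mode progression can be sketched in a few lines of Python. This is a minimal sketch, assuming the sequence loops back to the first mode after the last one (the text does not say whether it does); `MODES`, `MODE_DURATION_S`, and `current_mode` are hypothetical names, not identifiers from the project code.

```python
# Mode order as described in this section.
MODES = ["Exchange", "Swap", "Average", "Exquisite Corpse", "Shiva"]

# Two minutes per mode, matching the demo setting mentioned above.
MODE_DURATION_S = 120.0


def current_mode(elapsed_s, duration_s=MODE_DURATION_S):
    """Return the active mode name for a given elapsed time in seconds.

    Assumption: after the final mode the cycle wraps around to the first.
    """
    if elapsed_s < 0:
        raise ValueError("elapsed_s must be non-negative")
    index = int(elapsed_s // duration_s) % len(MODES)
    return MODES[index]
```

Passing a different `duration_s` (for example 180.0 for a study session) changes the pacing without touching the mode list.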

Mode 1: Exchange

Exchange mode is the most basic mode of interaction designed to acclimatize the participant to the experience and their virtual representation while beginning to form connections with other participants through the trail of particles exchanging between them. The movement of particles emulates a sharing of bodily energy flowing between pairs of participants.

Here, participants start their experience noticing the reflection of their bodies represented with particles and develop the sense of body-ownership of the reflected virtual body, noticing how it matches their movement. Participants pivot around and experiment with some basic movements to try out what their new body can do and develop an understanding of its spatial representation. A solo participant would see their particles flowing out and then gravitating back towards their own body. Then, as others join in, the participants notice that some of the particles that constitute their body escape and fly over to the next body of a different colour representing another participant. Another trail of particles from that body comes back in return and starts to fill up the participant’s body with a new colour. Participants identify that their bodies are now “connected” in a literal sense through the constant exchange of the particles constituting their bodies.

From our user tests and personal experimentation we did observe that this interaction does create a feeling of connection, but because participants do not choose to connect themselves and cannot escape the particle exchange, it may be perceived as a little uncomfortable and invasive if a participant is not ready. In the future we will create a smoother transition where, depending on the physical distance between participants, particles will be more or less strongly attracted.
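One possible shape for the planned distance-dependent attraction is sketched below in Python. This is not implemented in the project. The direction of the effect (closer participants attract more strongly), the smoothstep falloff, and the `near_m` and `far_m` thresholds are all assumptions chosen only to illustrate a smooth transition.

```python
def attraction_strength(distance_m, near_m=1.0, far_m=3.0):
    """Return an attraction weight in [0, 1] from the distance between two bodies.

    Hypothetical falloff: full attraction at or below near_m, none at or
    beyond far_m, with a smoothstep blend in between.
    """
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    # Normalise the distance into [0, 1] across the transition band.
    t = (distance_m - near_m) / (far_m - near_m)
    t = min(max(t, 0.0), 1.0)
    # Smoothstep avoids abrupt changes at either end of the band.
    smooth = t * t * (3.0 - 2.0 * t)
    return 1.0 - smooth
```

With a weight like this, two participants standing far apart would exchange few or no particles, and the exchange would ramp up gradually as they approach, giving them some control over when the connection begins.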

Mode 2: Swap

As in Exchange mode, in the Swap mode participants also get matched with another participant and see their body being controlled by their partner as well as their own body (to support the sense of body-ownership). This mode highlights the differences between participants’ movement and can encourage them to coordinate with each other. If they move synchronously, it will produce a coherent representation of one person, or they can play off each other’s movement creating a “dance” between two overlaid bodies. In Swap mode, the particles travel from the participant’s body to the overlaid swapped body, outlining both and suggesting difference through the trails of particles travelling between outlines.

Mode 3: Average

In the Average mode participants together control an averaged “third-body.” They see the averaged body overlaid onto their own on one of the projections and a separate third-body on another screen. A trail of particles flows from each participant’s body towards their locally superimposed average to indicate any divergence from the average. By moving together participants create one body that represents the average of all of their body movement. This interaction could naturally encourage participants to start moving closer to how the third-body moves, thus eventually moving together in sync. The average body behaves somewhat unpredictably when participants’ movements diverge widely but precisely matches a well-coordinated group, encouraging participants to move together and adopt the same poses.
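To make the idea of an averaged body concrete, the Python sketch below averages one joint's position and rotation across participants. It is an illustration under stated assumptions, not the project's code: rotations are unit quaternions in `(x, y, z, w)` order, and the rotation average is a sign-aligned normalised sum, a simple approximation that is reasonable when the rotations are fairly close to each other. The function names are hypothetical.

```python
import math


def average_positions(positions):
    """Component-wise mean of a list of (x, y, z) joint positions."""
    if not positions:
        raise ValueError("need at least one position")
    count = len(positions)
    return tuple(sum(axis) / count for axis in zip(*positions))


def average_quaternions(quats):
    """Approximate mean of unit quaternions given as (x, y, z, w) tuples.

    Each quaternion is flipped if needed so it lies in the same hemisphere
    as the first one (q and -q encode the same rotation), then the sum is
    normalised. This is an approximation, not an exact rotation mean.
    """
    if not quats:
        raise ValueError("need at least one quaternion")
    reference = quats[0]
    total = [0.0, 0.0, 0.0, 0.0]
    for q in quats:
        dot = sum(a * b for a, b in zip(q, reference))
        sign = -1.0 if dot < 0.0 else 1.0
        for i in range(4):
            total[i] += sign * q[i]
    norm = math.sqrt(sum(c * c for c in total))
    if norm == 0.0:
        raise ValueError("quaternions cancel out; average is undefined")
    return tuple(c / norm for c in total)
```

For two participants this yields the rotation halfway between their poses, which is consistent with the observation above that a well-coordinated group sees the third-body match their movement closely, while widely diverging poses produce a less predictable result.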

Mode 4: Exquisite Corpse

Figure 19. Exquisite Mode Projection 1

Figure 20. Exquisite Mode Interaction

Figure 21. Exquisite Mode Projection 2 (Third-Body)

Exquisite Corpse mode was inspired by the surrealist practice of the same name. This practice involves each artist drawing a portion of the body to form a complete but often bizarre artwork (Tate, n.d.). Similarly, participants each have control over a part of the third-body (e.g. upper body and lower body, or a single limb, depending on the number of participants). Thus, their movement is directly represented in the third body, unlike in the Average mode. Here, participants first test out moving different parts of their body to discover which body part they have control over. After exploring the range of motion they can choose to collaborate with the other participants by producing consistent movement, playing off each other’s ideas.
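The division of the third-body among participants could be sketched as follows. This Python example is purely illustrative: the segment names, their ordering, and the contiguous-split rule are assumptions, chosen so that two participants receive roughly an upper and a lower half while six participants each receive a single segment. The project's actual mapping may differ.

```python
# Hypothetical segment list, ordered roughly from top to bottom.
SEGMENTS = ["head", "left arm", "right arm", "torso", "left leg", "right leg"]


def assign_segments(participant_ids, segments=SEGMENTS):
    """Split the segment list into contiguous groups, one per participant.

    Group sizes differ by at most one; earlier participants receive the
    larger groups when the split is uneven.
    """
    count = len(participant_ids)
    if count == 0:
        return {}
    if count > len(segments):
        raise ValueError("more participants than available segments")
    base, extra = divmod(len(segments), count)
    assignment = {}
    start = 0
    for index, participant in enumerate(participant_ids):
        size = base + (1 if index < extra else 0)
        assignment[participant] = list(segments[start:start + size])
        start += size
    return assignment
```

For example, `assign_segments(["a", "b"])` gives participant `a` the head and both arms and participant `b` the torso and both legs.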

Here, each person has a copy of the resulting exquisite corpse body overlaid onto their local representation and there is a third-body representation on the second projection. Particles flow from each participant’s own body to their local representation and then subsequently to the shared third-body. This provides some level of local feedback while forming a sense of ownership over the third-body through the puppet-string-like streams of particles flowing towards the third-body. The particles mix together, forming patchworks of different colours to indicate participants’ control over this shared body.

This mode gets especially challenging with more than 4 people. It becomes harder to discover which body part each person controls and harder for everyone to coordinate as the feedback loop between participants becomes longer here. Each person needs to first interpret the observed movement made by someone else and then decide on what would be a good match for it and then implement it, after which other people will be interpreting and responding to this movement. This evokes some of the same surrealist qualities as the drawn exquisite corpse in which each artist was not permitted to look at what the others had drawn.

Mode 5: Shiva

Finally, in Shiva mode, the third body has multiple limbs, each controlled by one of the participants. Participants do not have a local representation but instead have their bodies overlaid together in the third-body. Particles flow from each participant’s body to the part of the Shiva which they control, indicating a sense of control and giving feedback as to which part they are controlling while maintaining the sense of shared ownership over one many-armed body.

This is the fastest mode to discover and interpret out of the third body modes as it allows for the clearest feedback. It encourages participants to move in coordination with each other but not necessarily in perfect sync, as participants tend to want to emphasize the many-armed image. Instead of directly matching each other, this mode encourages participants to respond to each other’s poses, producing harmonious forms.

Discussion

Outcomes

Through the process of developing Body-RemiXer and experimenting with it, we made the following observations:

- Gender is an important parameter of a virtual body that gets quickly picked up on by participants and can feel dissonant and distract from the experience. Thus, a gender-neutral virtual body was an essential but challenging part of the development process.

- Using solely a particle system to represent one’s virtual body creates a compelling ambiguous image, with an interesting movement interaction, however this comes at a cost of integrity of the virtual body structure, as bodies “disappear” with fast movement. Finding the right balance between coherency and stability of the representation and its fluidity is an important challenge.

- When mixing virtual bodies and using a mirror representation it’s useful to keep some more or less clear representation of the original body to help participants understand what they are controlling (especially when it changes between different modes of interaction). This likely would be less important when using virtual reality headsets, as the virtual representation of an individual’s body will be more directly connected to their view point; however, a personal representation of the body might create a strong sense of connection when they look down and observe it as their own.

- Participants interacting with the system have two distinctly different forms of engaging with it: (1) an individual interaction, where participants are most interested in discovering how their movements affect their virtual body, and (2) a group interaction, where participants focus on the shared or other body and explore how they can affect that representation. Both of these interactions are exploratory and playful with an outward attentional focus. However, only the second mode of engagement involves collaborating with other people, and it seems to encourage a more creative mode of interaction, where participants use their body movement to “make” another body move. In the first mode of attention, by contrast, there seems to be a stronger sense of individual representation and body ownership, and the interaction is more similar to dancing in that participants explore their own body and movement. The challenge now is to naturally merge these two modes of interaction and encourage the feeling of body ownership over the “shared body”. This likely would be easier to do in a VR headset, but achieving it in the projection-based display should also be possible.

- Being somewhat forcefully connected to another person through a virtual representation may feel uncomfortable, and if it is not done through participants’ own will and action, the desired feeling of connection may not be achieved.

- The development process of Body-RemiXer allowed for some unexpected outcomes to be integrated as part of the final project. One example is the levitation of a virtual shared body that happens when one participant squats down: instead of lowering the shared body to the ground, this lifts its legs up to the torso, making it levitate. When we discovered this “bug” through interaction we decided to keep it, as it produces an interesting unexpected effect that is fun for participants to discover and goes well with the general magical and ethereal image of the shared body.

Applications

Body-RemiXer is primarily designed as a public artistic installation and as a research prototype. As an installation, it will be shown in public spaces to explore embodied, playful forms of interaction between people mediated through technology. Currently it is submitted to The Fun Palace, which is held at the Centre for Digital Media in partnership with Cybernetics Conference.

As a research prototype, this system will be used in studies exploring how technology can support the feeling of connection between people and self-transcendent experiences. This research will explore questions related to the relationship between our bodies, their boundaries, and the sense of identity formed through their interaction.

In addition, the Body-RemiXer may be useful in many other applications. For example, it could be used at music events to provide guests with interesting visual feedback of their collective movements. Alternatively, it could be used in a children’s hospital to provide kids with an engaging way to play and connect with others while they are waiting, encouraging them to move around.

In a research context, Body-RemiXer can also be developed further to study how we can form connections with new people while avoiding appearance-based biases and only interacting through ambiguous particle-based representations (for this study all participants will need to wear HMDs). It could also be used to study how we can design a telepresence system supporting connection between people over distance in a novel way. For instance, this can be studied by setting up two networked public installations and matching participants in pairs across distance.

Study plan

In the future we will use this system as a public installation, as well as to run in-lab studies. The public installation will also be accompanied by a mini study to enhance the ecological validity of the collected data outside of the lab setting.

Research Questions

- Can participants experience shared embodiment with another person – embody another body together? Can they feel body ownership over that body?

- What effects would a shared embodiment have on them individually and their relationship with each other?

- How can the feeling of connection between people be supported through encouraging them to move together?

Since this project explores a novel phenomenon, a virtual experience that is different from what we get to experience in everyday life, we will first be doing an exploratory qualitative study in order to understand what the important aspects of the individual phenomenological experience of going through such an installation are. We will also relate these aspects of the phenomenological experience to elements of the experience design that might have triggered or supported the user experience. Since there is little research in this specific field at this moment, we are limited in our ability to generate testable hypotheses that can be formed prior to the data collection and evaluated with a randomized controlled experimental design. Instead, our first exploratory study will aim to create a rich understanding of the participants’ experience, from which future hypotheses can emerge. However, even in the absence of strict hypotheses, we have some prior expectations that emerge from our design process and design hypotheses (not to be confused with scientific hypotheses). These design hypotheses include (but are not limited to):

- Participants will develop a sense of body ownership over the virtual mirrored representations of themselves consisting of particle systems.

- Seeing themselves as floating and moving particles will on some level affect or alter participants’ perception of themselves.

- Having particles flow between two participants will form a virtual connection between their virtual bodies that will have a transfer effect on the connection between the two participants, having an effect on how they perceive their relationship to each other.

- Some modes of interaction (e.g. Average) will encourage participants to synchronize their movement with each other, which could become a mediator of the strengthened feeling of connection between participants.

- Through controlling the movement of a shared body, participants will be able to develop a sense of body ownership over a shared virtual body. This sense of body ownership will be different from the one described in the first design hypothesis above, as here the participants will feel that they own this body together and that they are united in this way.

- Transitioning between different virtual bodies through flowing particles will support the feeling of self-transcendence.

For the first exploratory study we will only use qualitative measures to capture the phenomenological experience of participants. We will invite 10 pairs of naive participants (not informed about the research questions of the study) to interact with Body-RemiXer, which will automatically advance through each of the interaction modes, giving 3 minutes for exploration of each mode. After the interaction, we will conduct open interviews with each individual separately. Below we list some exemplar interview questions we will use as a guide.

Interview Guide:

- Can you describe what your experience of interacting with Body-RemiXer was like? How did it evolve? Walk me through it step by step.

- What did you think of how you were represented in, and affecting, the virtual world?

- How did you feel in the experience? What kind of thoughts or feelings came up? At which moments?

- How were you moving through space? Why were you moving that way?

- What did you think of the other person? How would you describe your interaction?

- Were there any moments that stood out to you in your experience?

- Where can you see this system being used and what for?

- Any other comments?

In addition to the open interviews we will perform micro-phenomenological interviews with 6 of the participants. Micro-phenomenological interviews will allow us to explore the most significant moments of the participants’ experience with a high level of granularity. This rigorous qualitative interview method assists participants in re-evoking their past experience and discovering pre-attentive aspects of their experience. This method has two important advantages over regular open interviews:

- It allows for the separation of what participants might be theorizing about their past experience from the description of the actual phenomenological experience. This would be especially important when testing a developed interactive system, where participants will likely be biased to describe the user experience in the way that they think would match the developers’ expectations.

- The interviewer can assist the interviewee in re-orienting their attention to different dimensions and pre-attentive aspects of their experience. This is useful in the context of testing a digital system, as it makes it possible to collect descriptions of the actual phenomenological experience as opposed to feedback on the prototype. As Body-RemiXer is designed for an embodied interaction and a lot of embodied experiences in our day-to-day life remain pre-attentive, the interviewer needs to be able to assist the interviewee in accessing this dimension of the experience that they may not have originally paid attention to.

As the micro-phenomenological method is very labour intensive to conduct and analyze and it produces very detailed descriptions, we will only use this method with some participants at this stage of the project.

Through this first exploratory study we will have a better understanding of what kind of experiences Body-RemiXer affords and what design elements might support or trigger them. This will inform the future iteration of the system and more specific research hypotheses that we could test in a controlled experiment.

In the next study, we can start integrating quantitative measures that can assess the feeling of connection between participants. To achieve that, we will use observational measures of movement to measure movement synchronization as well as physiological measures of breathing and heart rate to measure physiological synchronization. Synchronization or attunement of movement and physiological activity has been shown to be correlated with the feeling of connection between people (Marsh et al., 2009). These types of measures are advantageous over post-experiment measures as they capture temporal data that would allow us to align the dynamics of synchronization with the interaction with the system, identify the moments (or rather periods) in which synchronization started increasing, and relate them to what exactly participants were experiencing.
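One simple way such a time-resolved synchronization measure could be computed is a windowed correlation between two participants' movement signals. The Python sketch below is only an illustration: the choice of Pearson correlation, the window and step sizes, and the function names are assumptions, and the study may well use a different synchrony metric. The inputs are assumed to be equally sampled signals such as per-frame movement speed or a breathing trace.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def windowed_sync(signal_a, signal_b, window, step):
    """Return (start_index, correlation) pairs over sliding windows.

    Each pair describes how similarly the two signals varied within that
    window, so rising values indicate periods of increasing synchrony.
    """
    length = min(len(signal_a), len(signal_b))
    results = []
    for start in range(0, length - window + 1, step):
        end = start + window
        results.append((start, pearson(signal_a[start:end], signal_b[start:end])))
    return results
```

Because each result carries its start index, the correlation values can be lined up against the timestamps of mode changes to see which parts of the interaction coincide with higher synchrony.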

Another frequently used measure of connection is the Inclusion of Other in the Self scale (Aron, Aron, & Smollan, 1992), which consists of a set of Venn diagrams representing “you” and “other” with different amounts of overlap. We can use this measure at the end of the experiment to capture the overall level of connection between the two participants and compare it in a between-subject experimental design with a control condition (that will be informed by the previous exploratory study). We will also integrate a behavioural measure of collaboration (a correlate of connection) where participants will be invited to play a Public Goods game to assess how much they will be willing to collaborate with each other.

Future Work

- To create a stronger sense of body ownership over a virtual reflection, in future work it would be interesting to integrate body scans that represent each participant with a 3D scan of their actual body. Here, we can start with a somewhat realistic representation and then slowly transition it to become more ambiguous to allow for mixing. This would make it easier for participants to identify which virtual representation is theirs and create a stronger feeling of connection with it. To accomplish this we plan to adapt Keijiro Takahashi’s RealSense point cloud to VFX converter RSVFX for the Kinect V2.

- We will develop gradual transitions between different modes that will hopefully increase the feeling of connection between participants step by step. First we need to give participants an opportunity to interact with the system with no mixing, just to explore the representation of their movement with particles and develop ownership over their virtual representation (a strong sense that what they see in the projection is “them”). Then we will start transitioning into modes of interaction between multiple participants, first with just a few particles flying over and mixing to form the third body, which would slowly start taking the form of Shiva, where each participant still fully controls their body and each of their movements directly affects a limb of Shiva. At this stage participants will start working together on creating a new virtual character, but each one of them will still have a clear sense of their identity in this process. Next we will transition into the exquisite corpse, where the Shiva will start taking the form of a more human-like body with a single set of limbs. While each participant’s movement will still have a direct effect, they will only be controlling a part of the third body, which would require them to work together with the other participant and will encourage them to develop a sense of ownership of the rest of the body that is not controlled by them. Finally, the interaction will transition into Average, where participants will have control of all the movement, but it is no longer direct.

- We need to explore opportunities for modifying the interaction design to allow participants to choose to start mixing by connecting their particles (e.g. reaching out a hand towards another participant to create a flow of particles between the two bodies).

- We will also integrate the use of HMDs for at first one and then multiple participants. This will require overcoming issues presented by the Unity High Definition Render Pipeline and setting up the system to work through networked computers.

- Update reflection tracking scripts to work with High Definition Render Pipeline

- We will add an ambient soundscape to the installation with some responsive sound being generated in reaction to participants’ movement.

- We will further develop the Kinect tracking to resolve some minor issues:

- Move the Kinect to the corner between the two projector screens to optimize how bodies are being picked up by encouraging participants to face the Kinect.

- Fix the problem with the neck getting tilted away from the Kinect when a participant is not facing the Kinect directly.

Team Member Contributions

John Desnoyers-Stewart

Roles:

- Creative Direction

- 3D Modelling

- Interaction Design

- Hardware Design

John Desnoyers-Stewart developed the Kinect body tracking code, performed 3D modelling of the avatars, and collaborated in the development of the particle system. Desnoyers-Stewart focused on the hardware and software integration as well as the aesthetics of the completed system. He designed the direct user interactions which were used to control the particle system. He conducted informal user-studies on early prototypes to ensure system functionality. Together with Stepanova he organized the physical set up for the installation in the Black Box.

Katerina R. Stepanova

Roles:

- Research Design

- User Experience Design

Katerina R. Stepanova managed the project development to encourage results which would be applicable to a diverse audience and could be used in research. She developed the research design and the user experience design to encourage collaboration between immersants. Stepanova also researched alternative options for particle system development and collaborated in the development. She has also worked on networking, which has not yet been integrated. She performed later informal user testing to iterate on the design, ensure the desired user experience and generate preliminary research hypotheses.

Resources

Tools Used

- Model Creation

- Blender blender.org/

- Fuse adobe.com/ca/products/fuse.html

- Mixamo mixamo.com

- Game Engine

- Unity 2019.1

- High Definition Render Pipeline github.com/Unity-Technologies/ScriptableRenderPipeline/wiki/High-Definition-Render-Pipeline-overview

- VFX Graph Preview github.com/Unity-Technologies/ScriptableRenderPipeline/wiki/Visual-Effect-Graph

Assets Used

- Keijiro Takahashi: SMRVFX https://github.com/keijiro/Smrvfx

- Aman Tiwari: MeshToSDF https://github.com/aman-tiwari/MeshToSDF/

- Microsoft: Kinect SDK and Kinect for Unity SDK https://developer.microsoft.com/en-us/windows/kinect

- Unity Community Post: Averaging Quaternions and Vectors http://wiki.unity3d.com/index.php/Averaging_Quaternions_and_Vectors

- Mixamo Content Creator Package https://www.mixamo.com/#/software

References

Ahn, S. J. (Grace), Bostick, J., Ogle, E., Nowak, K. L., McGillicuddy, K. T., & Bailenson, J. N. (2016). Experiencing Nature: Embodying Animals in Immersive Virtual Environments Increases Inclusion of Nature in Self and Involvement With Nature. Journal of Computer-Mediated Communication, 21(6), 399–419. https://doi.org/10.1111/jcc4.12173

Aron, A., Aron, E. N., & Smollan, D. (1992). Inclusion of Other in the Self Scale and the structure of interpersonal closeness. Journal of Personality and Social Psychology, 63(4), 596–612. https://doi.org/10.1037/0022-3514.63.4.596

Banakou, D., Hanumanthu, P. D., & Slater, M. (2016). Virtual Embodiment of White People in a Black Virtual Body Leads to a Sustained Reduction in Their Implicit Racial Bias. Frontiers in Human Neuroscience, 10. https://doi.org/10.3389/fnhum.2016.00601

Bourdin, P., Barberia, I., Oliva, R., & Slater, M. (2017). A Virtual Out-of-Body Experience Reduces Fear of Death. PLOS ONE, 12(1), e0169343. https://doi.org/10.1371/journal.pone.0169343

Hamlin, J. K., Mahajan, N., Liberman, Z., & Wynn, K. (2013). Not like me = bad: Infants prefer those who harm dissimilar others. Psychological Science, 24(4), 589–594.

Hollan, J., Hutchins, E., & Kirsh, D. (2000). Distributed cognition: toward a new foundation for human-computer interaction research. ACM Transactions on Computer-Human Interaction (TOCHI), 7(2), 174-196.

Markowitz, D. M., Laha, R., Perone, B. P., Pea, R. D., & Bailenson, J. N. (2018). Immersive Virtual Reality Field Trips Facilitate Learning About Climate Change. Frontiers in Psychology, 9. https://doi.org/10.3389/fpsyg.2018.02364

Markley, F. L., Cheng, Y., Crassidis, J. L., & Oshman, Y. (2007). Averaging Quaternions. Journal of Guidance, Control, and Dynamics, 30(4), 1193–1196. https://doi.org/10.2514/1.28949

Marsh, K. L., Richardson, M. J., & Schmidt, R. C. (2009). Social Connection Through Joint Action and Interpersonal Coordination. Topics in Cognitive Science, 1(2), 320–339. https://doi.org/10.1111/j.1756-8765.2009.01022.x

Oliveira, E. C. D., Bertrand, P., Lesur, M. E. R., Palomo, P., Demarzo, M., Cebolla, A., … Tori, R. (2016). Virtual Body Swap: A New Feasible Tool to Be Explored in Health and Education. 2016 XVIII Symposium on Virtual and Augmented Reality (SVR), 81–89. https://doi.org/10.1109/SVR.2016.23

Rosenberg, R. S., Baughman, S. L., & Bailenson, J. N. (2013). Virtual superheroes: using superpowers in virtual reality to encourage prosocial behavior. PloS One, 8(1), e55003. https://doi.org/10.1371/journal.pone.0055003

Tate. (n.d.). Cadavre Exquis (Exquisite Corpse). Retrieved April 25, 2019, from https://www.tate.org.uk/art/art-terms/c/cadavre-exquis-exquisite-corpse

Tarr, B., Slater, M., & Cohen, E. (2018). Synchrony and social connection in immersive Virtual Reality. Scientific Reports, 8(1), 3693. https://doi.org/10.1038/s41598-018-21765-4

Yee, N., Bailenson, J. N., & Ducheneaut, N. (2009). The Proteus Effect: Implications of Transformed Digital Self-Representation on Online and Offline Behavior. Communication Research, 36(2), 285–312. https://doi.org/10.1177/0093650208330254

Yee, N., & Bailenson, J. (2006). Walk A Mile in Digital Shoes: The Impact of Embodied Perspective-Taking on The Reduction of Negative Stereotyping in Immersive Virtual Environments. 9.